VoyagerSight

Replication and extension study of NVIDIA’s Voyager, exploring the effects of multimodal inputs on LLM-based Minecraft agents

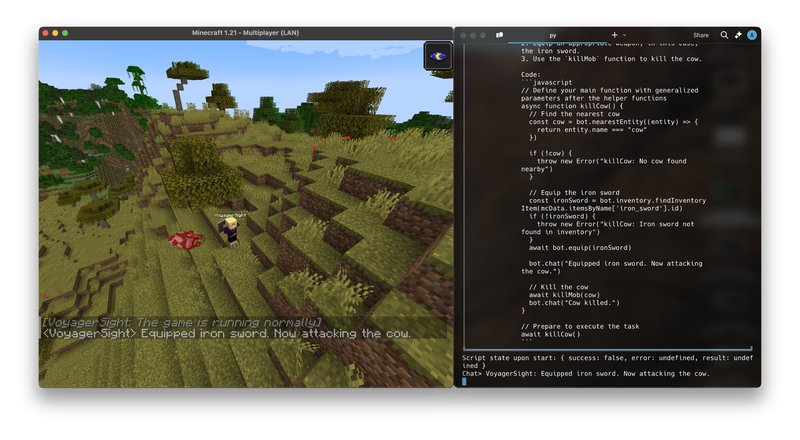

VoyagerSight is a research replication and extension of NVIDIA’s Voyager paper that integrates multimodal perception into autonomous Minecraft agents. Built on recent LLMs with faster inference and stronger multimodal capabilities, it tests the hypothesis that agents with multimodal perception will perform more effectively than text-only agents and will also display more diverse behavior and creativity.
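One way to picture the difference between a text-only agent and a multimodal one is at the prompt level: the multimodal agent receives the same textual game state plus an image of what it sees. The sketch below is hypothetical. `Observation`, its fields, and `build_user_message` are illustrative names not taken from this project, and the message layout assumes an OpenAI-style chat API that accepts `image_url` content parts carrying a base64 data URL. VoyagerSight's actual model, API, and observation format may differ.

```python
from __future__ import annotations

import base64
from dataclasses import dataclass, field


@dataclass
class Observation:
    """Hypothetical bundle of what the agent perceives at one step."""
    # Hypothetical subset of the textual game state a Voyager-style agent receives
    biome: str
    inventory: dict[str, int] = field(default_factory=dict)
    nearby_blocks: list[str] = field(default_factory=list)
    # Raw PNG bytes of a first-person screenshot; None for a text-only agent
    screenshot_png: bytes | None = None

    def to_text(self) -> str:
        """Render the textual state as a few labeled lines."""
        inv = ", ".join(f"{k}: {v}" for k, v in sorted(self.inventory.items())) or "empty"
        blocks = ", ".join(self.nearby_blocks) or "none"
        return f"Biome: {self.biome}\nInventory: {inv}\nNearby blocks: {blocks}"


def build_user_message(obs: Observation) -> dict:
    """Build one user message in an assumed OpenAI-style content-parts format."""
    # The text part is always present, so text-only and multimodal runs share it
    parts = [{"type": "text", "text": obs.to_text()}]
    if obs.screenshot_png is not None:
        # Attach the screenshot as a base64 data URL image part
        encoded = base64.b64encode(obs.screenshot_png).decode("ascii")
        parts.append({
            "type": "image_url",
            "image_url": {"url": f"data:image/png;base64,{encoded}"},
        })
    return {"role": "user", "content": parts}
```

Passing an `Observation` with `screenshot_png=None` yields a single text part, so the same code path can serve as the text-only baseline when comparing the two conditions.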

Technologies Used:

Full project documentation, code, and paper coming soon!